The abrupt death of hitchBOT on August 1, 2015, shocked his fans. hitchBOT, the friendly hitchhiker robot, had traveled across Germany, the Netherlands, Canada, and parts of the USA. In Philadelphia, however, the robot was vandalized, a scenario he had not been programmed to deal with. And so his journey ended.

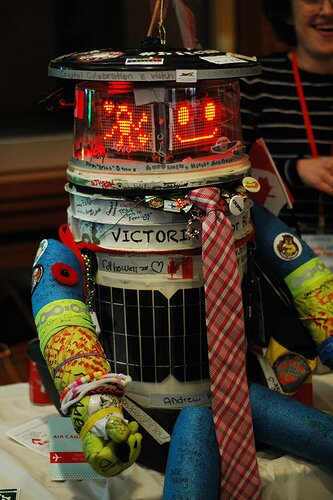

hitchBOT

(Photo credit: Kris Scott)

At least his physical journey did. The “hitchBOT experiment” lives on: on social media, shared stories about hitchBOT’s journey show the bond many people formed with the robot. Photographs taken in the places hitchBOT visited document positive emotions towards him (such as kids cuddling the friendly hitchhiker). And the questions of why he was vandalized and how we should deal with “robot murderers” keep coming up on various sites.

Indeed, hitchBOT’s journey raises multiple topics. A prominent issue is how robots and the individuals who interact with them should behave toward each other. These ethical questions are not new, but they are now being applied to the more recent development of so-called social robots. Social robots such as hitchBOT are robots with which individuals bond, mostly because of their human- or animal-like features (the friendly kind, not the Terminator-like kind). Individuals tend to anthropomorphize such machines more than they do less human- or animal-like robots such as autonomous vacuum cleaners. Social robots are developing fast and are already employed as caregiving assistants for the elderly, as toys, and as home companions.

While the hitchBOT experiment mostly raises questions of how we should interact with robots, other scenarios raise questions of how robots should behave. Since robots’ actions are programmed by engineers, the question of “behaving” turns into a question of “coding”. In other words, what range of actions should be encoded into the robots’ DNA, or the RoboCode? In our paper presented at the ACM Web Science Conference in Oxford this year, we focus on a specific ethical issue: privacy and robots. We ask whether robot engineers have a responsibility to consider the privacy implications of their devices and to program them in a privacy-friendly way. Home companion robots that can monitor individuals and share footage of their daily, private lives illustrate this area of concern. Because users bond with such robots (almost as with a friend), sharing such footage with others (such as the robot’s manufacturer) could raise serious privacy concerns. How can we ensure that a “robot-friend” behaves ethically, as a friend would, according to social and legal norms?

We argue that tackling such issues and deliberating on such fundamental questions requires a new class of authority. We call these yet-to-be-established experts “RoboCode-Ethicists”. In our view, independent specialists or entities should reflect on developments in robotics and develop best practices for dealing with upcoming issues. One such issue, as we discuss in our paper, is how to balance the privacy implications of robots vis-à-vis their users. RoboCode-Ethicists need to be literate in computing and engineering as well as in social science. We need experts who think about the wider implications of robots in our society. And we need them now.

For more, read the paper ‘RoboCode-Ethicists: Privacy-friendly robots, an ethical responsibility of engineers?’ by Christoph Lutz and Aurelia Tamò.
